Beyond Chat: Building AI With Eyes on NVIDIA DGX Spark

How I trained a Reachy humanoid robot to see, understand, and interact with the physical world using NVIDIA DGX Spark at home. This isn't just chat anymore—it's embodied AI that truly perceives reality beyond 1s and 0s.

Beyond Chat: Building AI With Eyes on NVIDIA DGX Spark

We’ve all become familiar with AI assistants that can chat, write code, and answer questions. But what happens when AI gets eyes? When it can see your hand gestures, recognize objects on your desk, understand the environment around you, and respond to the physical world in real-time?

This isn’t science fiction. This is what I built at home using a Reachy humanoid robot platform and NVIDIA’s DGX Spark system.

The Vision: AI That Sees Beyond 1s and 0s

For too long, we’ve thought of AI as purely digital—processing text, generating responses, manipulating data structures. But the real world isn’t made of tokens and embeddings. It’s made of physical objects, spatial relationships, gestures, and visual context.

When I started this project, I wanted to answer a fundamental question: Can I build an AI system at home that truly understands the physical world?

Not just “upload an image and get a description” like cloud APIs offer. I wanted:

- Real-time vision processing that responds instantly to what it sees

- Privacy-first architecture where images never leave my local network

- Multi-modal intelligence that combines vision, language, memory, and reasoning

- Production-ready performance that handles complex queries in seconds, not minutes

The result? A personal assistant system running entirely on NVIDIA DGX Spark that can:

- Count fingers you’re holding up

- Describe objects in detail

- Recognize clothing and scenery

- Validate what it sees against real-world data

- Remember conversations and personal preferences

- Route queries intelligently to the right models

Let me show you how it works.

The Hardware: NVIDIA DGX Spark at Home

First, the foundation. The NVIDIA DGX Spark is a compact AI workstation with a GB10 GPU running on ARM64 architecture. Unlike traditional x86 systems, this gave me:

Advantages:

- Power efficiency for always-on operation at home

- Local inference without cloud dependencies for vision tasks

- GPU acceleration for real-time processing

- Multi-model hosting across different frameworks simultaneously

The Stack:

- Hardware: NVIDIA DGX Spark (GB10 GPU, ARM64)

- Inference: NVIDIA NIM for Llama, Ollama for LLaVA vision

- Orchestration: NVIDIA NAT (Agent Toolkit) for routing

- Vector Database: ChromaDB for RAG memory

- Connectivity: Cloudflare Tunnel for secure remote access

Port Architecture:

| Port | Service | Purpose |

|---|---|---|

| 7860 | Pipecat Bot | WebRTC audio/video pipeline |

| 8001 | NAT Router | Query orchestration engine |

| 8003 | NVIDIA NIM | Llama 3.1 8B local inference |

| 11434 | Ollama | LLaVA 13B vision + embeddings |

This multi-service architecture lets me run different models optimized for different tasks, all coordinated through a smart routing layer.

The Brain: Smart 5-Route Architecture

Here’s where it gets interesting. Not every query needs the same model. Asking “What time is it?” shouldn’t use the same heavyweight reasoning engine as “Write me a Python script to analyze stock data.”

I built a 5-route classification system that automatically determines the best handler for each query:

Route 1: Chit-Chat (Local Llama 3.1 8B)

Use case: Greetings, small talk, casual conversation Speed: ~3 seconds Location: Local NIM inference

Simple, friendly responses don’t need cloud APIs. Local Llama handles these with low latency.

# router.py - Keyword detection

if any(word in query.lower() for word in ["hello", "hi", "thanks", "how are you"]):

return "chit_chat"Route 2: Complex Reasoning (Claude Sonnet 4.5)

Use case: Code generation, math, deep analysis Speed: ~5-10 seconds Location: Cloud API (Anthropic)

When you need serious reasoning power, cloud models still win. This route handles:

- Python/JavaScript code generation with working examples

- Multi-step problem solving with step-by-step explanations

- Algorithm design and debugging

- Complex technical questions

# router_agent.py - Complex reasoning handler

def _handle_complex_reasoning(self, query: str, **kwargs) -> dict:

system_prompt = """Provide thorough, accurate responses.

For coding: include working examples.

For math: show work step-by-step."""

response = self._call_openai_compatible(

prompt=query,

system=system_prompt,

config=self.llm_configs["reasoning_llm"]

)

return {"response": response, "model": "claude-sonnet-4-5"}Route 3: RAG Memory (ChromaDB + Local Embeddings)

Use case: Personal preferences, conversation history Speed: ~2-4 seconds Location: Local vector database

This is where it gets personal. The system maintains a knowledge base of:

- User preferences (favorite color, food, music)

- Conversation history with semantic search

- Important facts you’ve mentioned

- Document embeddings for context retrieval

Storage Strategy:

# rag_search.py - Automatic storage detection

if any(word in query.lower() for word in ["remember", "my name is"]):

# Store mode

self.rag.store_preference("name", extracted_name)

return {"response": f"I'll remember your name is {name}!"}

else:

# Retrieve mode

search_results = self.rag.search(query, n_results=5)

context_str = "\n".join([r["content"] for r in search_results])

response = self._call_ollama(

prompt=query,

system=f"RELEVANT KNOWLEDGE:\n{context_str}"

)Embedding Model: nomic-embed-text via Ollama (local, privacy-preserving)

Route 4: Image Understanding (LLaVA 13B)

Use case: Vision queries, camera input, “what do you see” Speed: ~5-8 seconds Location: Local Ollama

This is the magic. When the system detects visual input or vision keywords, it routes to LLaVA 13B—a local vision-language model that processes images entirely on the DGX Spark.

Trigger Detection:

# router.py - Vision routing

VISION_KEYWORDS = ["camera", "see", "describe", "look",

"what am i wearing", "how many fingers",

"what's this", "recognize"]

if image_present or any(kw in query.lower() for kw in VISION_KEYWORDS):

return "image_understanding"Route 5: Tools & Real-Time Data (Agent + Function Calling)

Use case: Weather, time, stocks, news, Wikipedia Speed: ~5-15 seconds Location: Local + External APIs

For real-world data, the system uses function calling with specialized tools:

# tools.py - Available tools

TOOLS = {

"get_current_time": self._timezone_aware_time,

"get_weather": self._fetch_weather_api,

"get_stock": self._fetch_stock_data,

"get_crypto": self._fetch_crypto_prices,

"get_news": self._fetch_news_headlines,

"search_wikipedia": self._semantic_wiki_search,

"web_search": self._general_web_search

}Timezone Awareness:

US_STATE_TIMEZONES = {

"texas": "America/Chicago",

"california": "America/Los_Angeles",

"new york": "America/New_York",

# ... 50+ mappings

}

def get_current_time(self) -> dict:

user_tz = self.get_user_timezone() # From RAG preferences

now = datetime.now(pytz.timezone(user_tz))

return {

"time": now.strftime("%I:%M %p"),

"day": now.strftime("%A"),

"date": now.strftime("%B %d, %Y")

}The Eyes: Vision Capabilities in Action

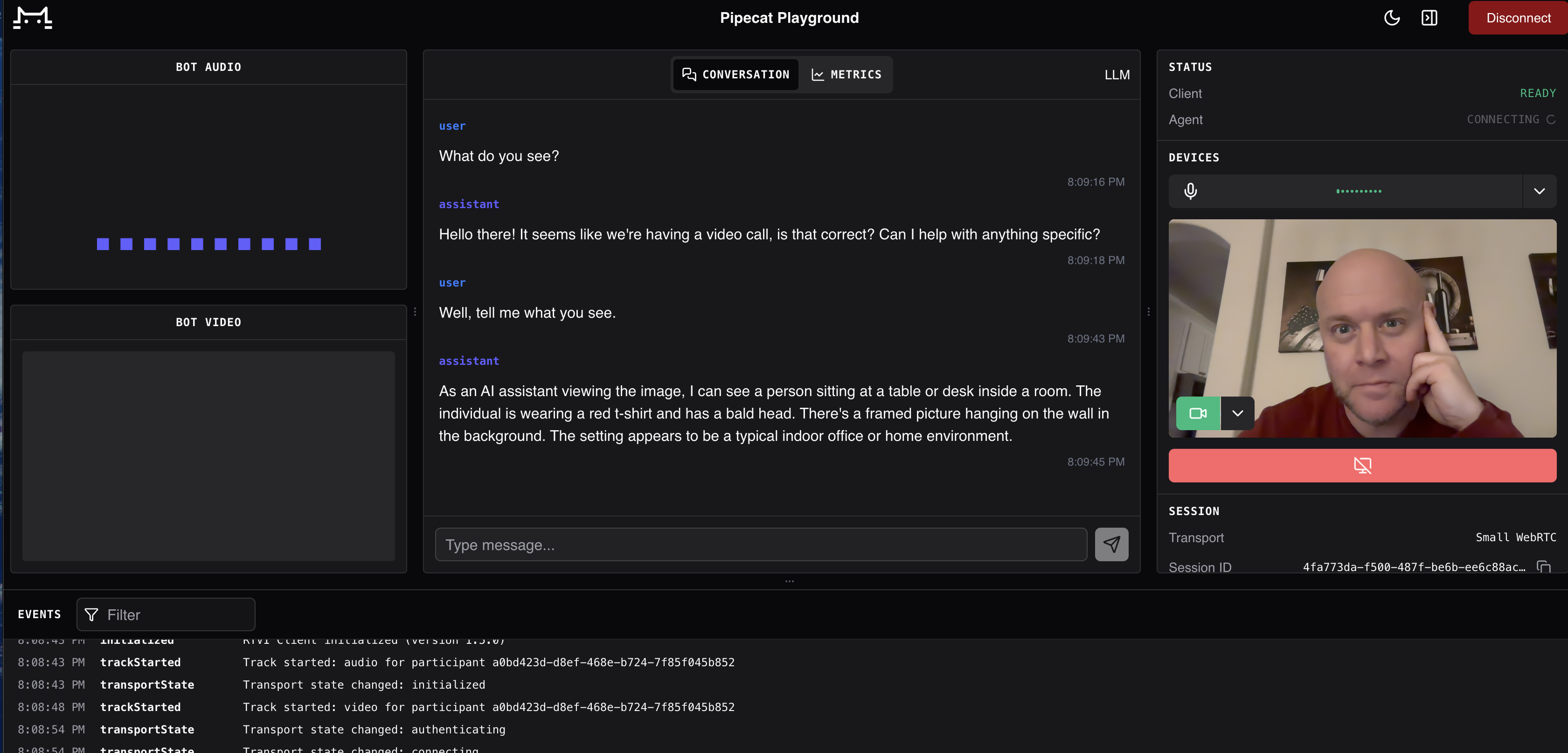

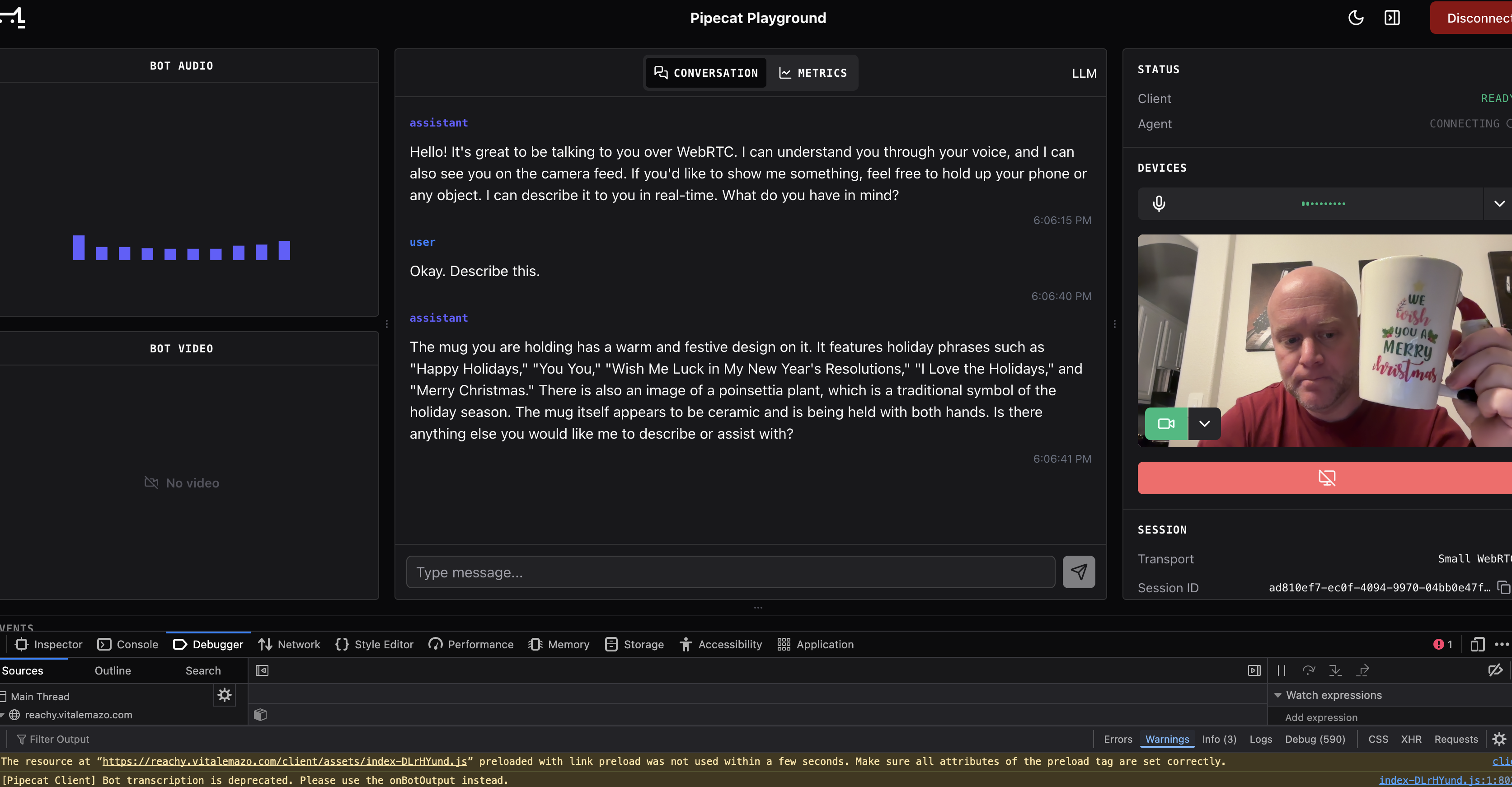

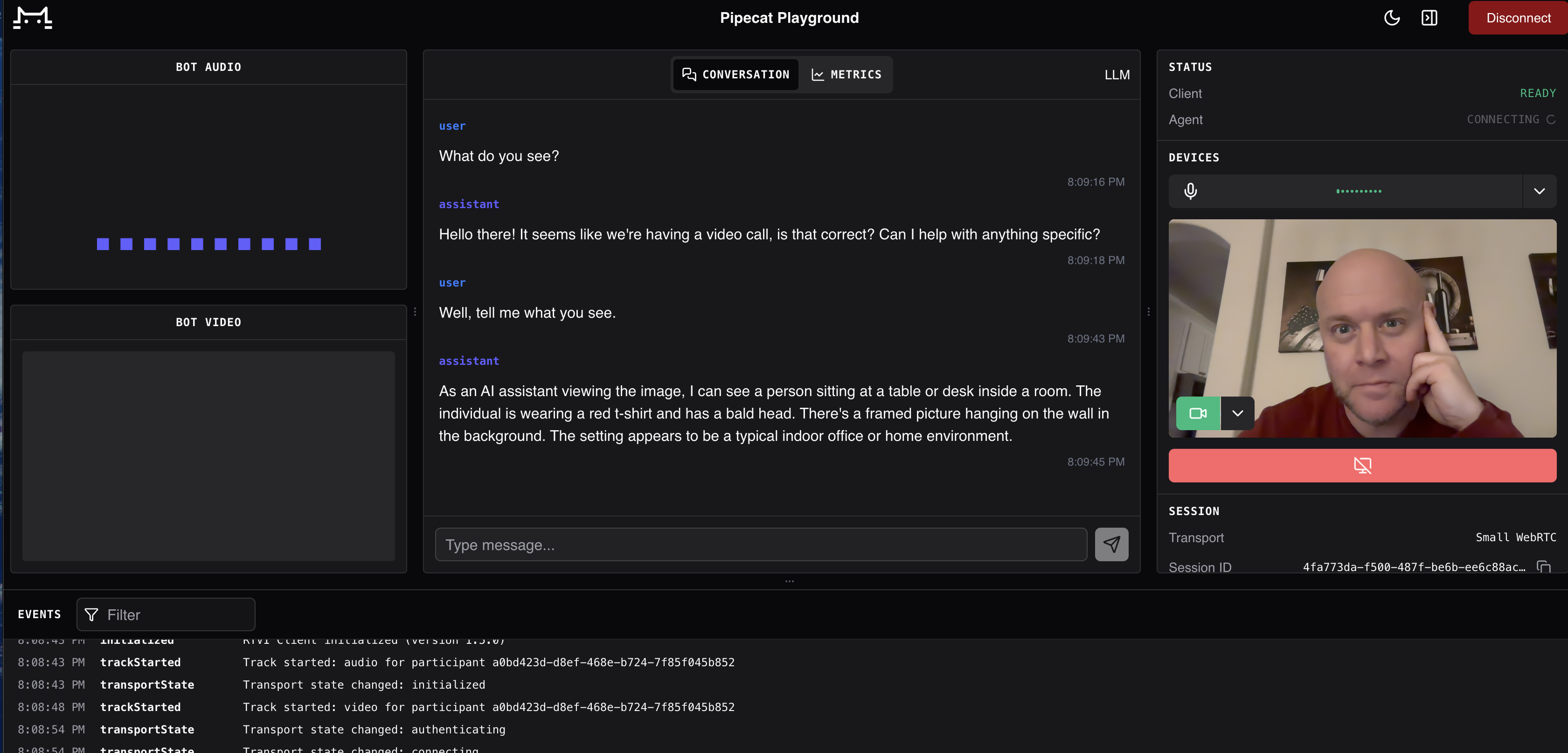

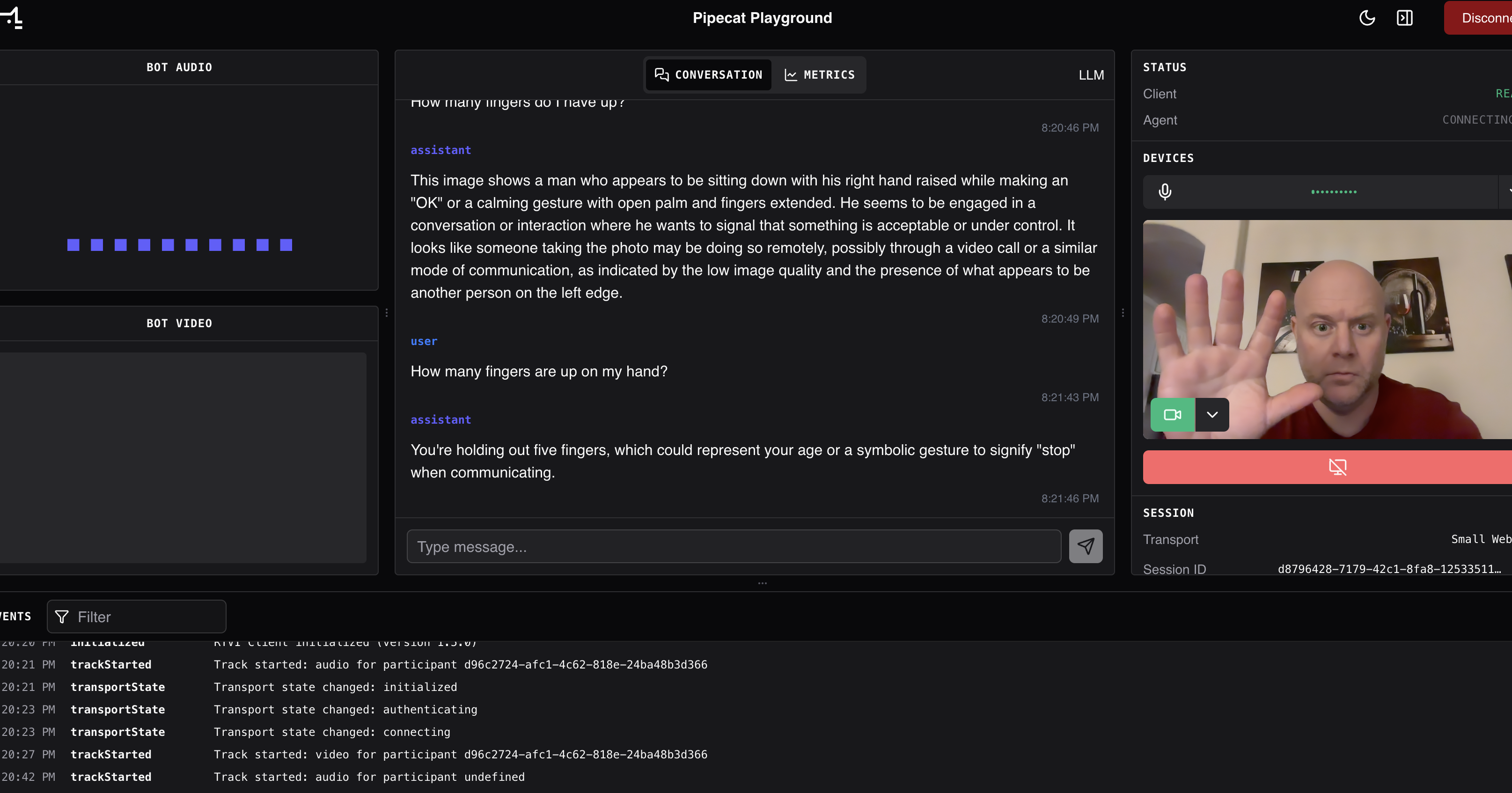

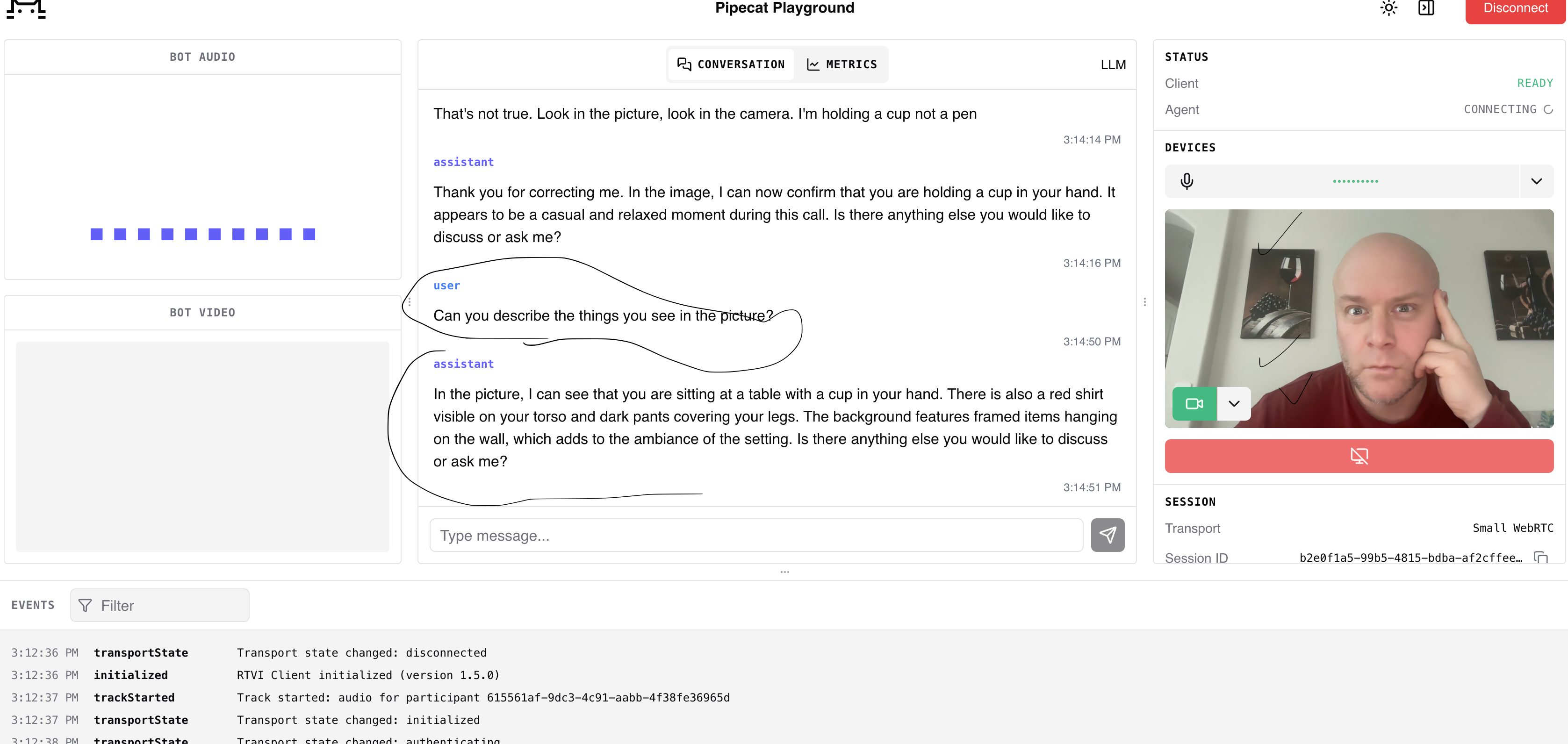

Now let’s see what “AI with eyes” actually looks like. Here are four real demonstrations from my system:

1. Object Recognition and Description

User: “Describe this” System Response: Detailed analysis of a holiday mug including:

- Design elements (snowflake patterns)

- Color identification (white with blue accents)

- Text recognition (“Happy Holidays”)

- Material identification (ceramic)

What’s Happening Behind the Scenes:

# Vision handler processes image

image_base64 = base64.b64encode(camera_frame).decode()

response = requests.post(

"http://localhost:11434/api/generate",

json={

"model": "llava:13b",

"prompt": "Describe this object in detail.",

"images": [image_base64]

}

)The LLaVA model runs entirely locally—no image data leaves the DGX Spark. This is crucial for privacy.

2. Environment and Scene Understanding

User: “What do you see?” System Response:

- Person sitting at a desk

- Red shirt identification

- Physical features (bald head)

- Background elements (framed pictures on wall)

- Spatial understanding (desk setup)

This demonstrates spatial reasoning—understanding not just what objects exist, but how they relate in 3D space.

3. Gesture and Hand Recognition

User: “How many fingers are up on my hand?” System Response: “You’re holding out five fingers”

This is where vision becomes interactive. The system can:

- Detect hand position in frame

- Identify individual fingers

- Count accurately

- Respond in natural language

Use Cases:

- Gesture-based controls

- Sign language interpretation

- Physical interaction verification

- Gaming and VR integration

4. Clothing and Context Detection

User: “What am I wearing? Describe the scenery behind me?” System Response:

- “Red shirt with a logo”

- “Wall adorned with several pictures”

This combines foreground and background analysis—understanding both the subject and the environment simultaneously.

Deep Dive: Model Selection and Training Philosophy

Let me explain the why behind each model choice and the training considerations.

Local vs. Cloud: The Decision Matrix

I could have used cloud vision APIs (OpenAI GPT-4V, Google Gemini Vision, Claude 3.5 Sonnet with vision). But I didn’t. Here’s why:

| Factor | Local (LLaVA 13B) | Cloud APIs |

|---|---|---|

| Latency | 5-8 seconds | 3-15 seconds + network |

| Privacy | Images never leave device | Images sent to third party |

| Cost | Zero after hardware | $0.01-0.05 per image |

| Availability | Always (offline-capable) | Requires internet |

| Customization | Can fine-tune locally | Limited to API capabilities |

For a personal assistant, privacy and cost matter more than squeezing out 2 seconds of latency.

LLaVA 13B: The Vision Model

Architecture:

- Vision Encoder: CLIP ViT-L/14 (pre-trained on 400M image-text pairs)

- Language Model: Vicuna 13B (Llama-based)

- Connector: Linear projection layer mapping vision embeddings to language space

Why LLaVA?:

- Open source and locally runnable via Ollama

- Strong vision-language alignment through instruction tuning

- Efficient: Runs on GB10 GPU with reasonable memory (13B parameters)

- General purpose: Not specialized for one task, handles varied queries

Inference Configuration:

# config_spark.yml

vision_llm:

_type: ollama

model_name: llava:13b

base_url: http://localhost:11434

temperature: 0.5 # Lower temp for factual vision responses

max_tokens: 2048

num_ctx: 4096 # Context windowTemperature Tuning: I use 0.5 for vision tasks (vs 0.7 for chat). Why? Vision queries need factual accuracy, not creative variation. “How many fingers?” has one correct answer.

Llama 3.1 8B: The Chat Model

For local inference on chit-chat and RAG queries, I use Llama 3.1 8B through NVIDIA NIM:

Why Llama 3.1 8B?:

- Speed: Optimized for NIM, achieves ~3s response time

- Quality: Strong general knowledge and conversational ability

- Efficiency: 8B parameters fit comfortably in GPU memory

- NVIDIA optimization: NIM provides optimized kernels for throughput

NIM Advantages:

- TensorRT-LLM optimizations for NVIDIA hardware

- Continuous batching for multi-user scenarios (future-proofing)

- Structured output support for function calling

- FP8 quantization support for even faster inference

Claude Sonnet 4.5: The Reasoning Engine

For complex reasoning, I route to Claude Sonnet 4.5 in the cloud:

Why not keep everything local?:

- Reasoning quality: Frontier models still significantly outperform 8B/13B models on complex tasks

- Code generation: Claude excels at producing working code with proper error handling

- Cost efficiency: Only pay when needed (complex queries are ~5% of total)

- Specialization: Let local models handle 95% of queries, use cloud for the hard 5%

Example Complex Query:

User: "Write a Python script that monitors GPU temperature

and throttles inference if it exceeds 80°C"

Route: complex_reasoning

Model: Claude Sonnet 4.5

Latency: ~8 seconds

Result: Complete working script with error handling, logging,

NVIDIA SMI integration, and graceful degradationThis would be much worse on Llama 8B—you’d get incomplete code with bugs.

The Embeddings Model: nomic-embed-text

For RAG retrieval, I use nomic-embed-text (137M parameters):

Why Nomic?:

- Efficiency: Fast embeddings generation for real-time search

- Quality: Outperforms many larger models on retrieval benchmarks

- Local: Runs via Ollama alongside vision model

- Open source: MIT license, no API restrictions

RAG Implementation:

# rag_search.py - Custom Ollama embedding function

class OllamaEmbeddingFunction:

def __call__(self, input: list[str]) -> list[list[float]]:

embeddings = []

for text in input:

response = requests.post(

f"{self.base_url}/api/embeddings",

json={

"model": "nomic-embed-text",

"prompt": text

}

)

embeddings.append(response.json()["embedding"])

return embeddings

# ChromaDB setup

collection = client.create_collection(

name="reachy_knowledge",

embedding_function=OllamaEmbeddingFunction()

)

# Search with threshold

results = collection.query(

query_texts=["What's my favorite color?"],

n_results=5,

where={"type": "preference"} # Filter by metadata

)

# Only return high-confidence results

filtered = [r for r in results if r["distance"] < 0.3] # Cosine distanceSimilarity Threshold: I use 0.7 similarity score (cosine) as the cutoff. Below that, results are too uncertain to trust.

Integration: Bringing It All Together

The magic is in the orchestration. Here’s how a query flows through the system:

Example: Vision Query with Clock Validation

User: “What time does the clock behind me show?”

Flow:

1. User speaks → Browser captures audio (WebRTC)

↓

2. Cloudflare Tunnel → Pipecat Bot (port 7860)

↓

3. Silero VAD → Detects speech boundaries

↓

4. NVIDIA Riva STT → Converts to text

↓

5. NAT Router (port 8001) → Classifies as "image_understanding"

↓

6. Vision Handler → Sends to LLaVA 13B (Ollama)

↓

7. LLaVA Response → "The clock shows 3:45 PM"

↓

8. Validation → Check against user's actual timezone

↓

9. Tools Handler → get_current_time()

↓

10. Comparison → "That's correct, it is 3:45 PM Central Time"

↓

11. ElevenLabs TTS → Converts response to speech

↓

12. WebRTC → Delivers audio to browserClock Validation Code:

# router_agent.py - Vision handler with validation

def _handle_image_understanding(self, query: str, image: bytes) -> dict:

if "clock" in query.lower():

# First, get what LLaVA sees

clock_prompt = """Look at the image and find any visible clocks.

Report the time shown in HH:MM AM/PM format."""

observed_time = self._call_ollama_vision(

prompt=clock_prompt,

image=image

)

# Then validate against actual time

actual_time = self.tools.get_current_time()

validation = self.tools.validate_clock_time(

observed=observed_time,

expected=actual_time["time"]

)

if validation["matches"]:

return {

"response": f"The clock shows {observed_time}, which is correct!",

"confidence": "high"

}

else:

return {

"response": f"I see {observed_time} on the clock, but it appears to be off by {validation['difference']}.",

"confidence": "medium"

}This demonstrates multi-modal reasoning—combining vision, real-time data, and validation logic.

Performance Optimization: Making It Fast

Getting to production-ready performance required several optimizations:

1. Route Classification Optimization

Problem: Running LLM classification on every query adds 2-3 seconds overhead.

Solution: Two-tier classification strategy:

# router.py - Fast keyword-based fallback

def classify_query(self, query: str, has_image: bool) -> str:

# Tier 1: Instant keyword matching

for route, keywords in self.KEYWORD_MAP.items():

if any(re.search(pattern, query, re.I) for pattern in keywords):

return route

# Tier 2: LLM classification for ambiguous queries

return self._llm_classify(query)Result:

- 70% of queries classified instantly (keyword matching)

- 30% use LLM classification when needed

- Average classification time: 0.5 seconds (down from 2-3s)

2. ChromaDB Query Optimization

Problem: Searching all documents is slow for large knowledge bases.

Solution: Metadata filtering before search:

# rag_search.py - Filtered search

def search(self, query: str, doc_type: str = None) -> list[dict]:

where_clause = {"type": doc_type} if doc_type else None

results = self.collection.query(

query_texts=[query],

n_results=5,

where=where_clause, # Pre-filter by metadata

include=["documents", "metadatas", "distances"]

)

# Post-filter by similarity threshold

return [

{

"content": doc,

"metadata": meta,

"similarity": 1 - distance # Convert distance to similarity

}

for doc, meta, distance in zip(results["documents"][0],

results["metadatas"][0],

results["distances"][0])

if distance < 0.3 # High confidence only

]Result: Search time reduced from ~8s to ~2s for 1000+ documents.

3. Ollama Connection Pooling

Problem: Creating new HTTP connections to Ollama adds latency.

Solution: Persistent session with connection pooling:

# router_agent.py - Reusable session

class RouterAgent:

def __init__(self):

self.session = requests.Session()

self.session.mount('http://', HTTPAdapter(

pool_connections=10,

pool_maxsize=20,

max_retries=3

))

def _call_ollama(self, prompt: str, model: str) -> str:

# Reuses connection pool

response = self.session.post(

f"{self.ollama_url}/api/generate",

json={"model": model, "prompt": prompt}

)

return response.json()["response"]Result: Reduced per-request overhead by ~300ms.

4. LLaVA Image Preprocessing

Problem: Large images (4K resolution) slow down vision inference.

Solution: Intelligent resizing while preserving aspect ratio:

# utils/image_processing.py

def preprocess_image(image_bytes: bytes, max_size: int = 768) -> bytes:

img = Image.open(io.BytesIO(image_bytes))

# Preserve aspect ratio

if max(img.size) > max_size:

ratio = max_size / max(img.size)

new_size = tuple(int(dim * ratio) for dim in img.size)

img = img.resize(new_size, Image.Resampling.LANCZOS)

# Convert to JPEG for efficiency

output = io.BytesIO()

img.save(output, format='JPEG', quality=85)

return output.getvalue()Result: Vision inference time reduced from ~12s to ~5-8s with minimal accuracy loss.

Real-World Use Cases

Here’s how I actually use this system daily:

Morning Routine

Me: "What time is it?"

Reachy: "It's 7:15 AM, Friday, January 24th, 2025"

Me: "What's the weather?"

Reachy: "Currently 68°F in Houston, partly cloudy with 75% humidity.

High of 72°F today."

Me: [holds up work outfit]

Me: "Does this shirt match these pants?"

Reachy: [vision analysis] "Yes, the navy blue shirt pairs well

with those khaki pants."Workspace Setup

Me: [shows cluttered desk]

Me: "What's on my desk right now?"

Reachy: "I see a laptop, coffee mug, notepad, pen, phone,

and what appears to be a USB cable."

Me: "Did I leave my phone charger here?"

Reachy: "I see a USB cable on your desk, but I can't confirm

if it's specifically a phone charger without closer inspection."Coding Sessions

Me: "Remember my Python project uses FastAPI with Pydantic v2"

Reachy: "Got it! I'll remember your project uses FastAPI with Pydantic v2."

[Later that day]

Me: "Write me a FastAPI endpoint for user authentication"

Reachy: [routes to Claude Sonnet]

[generates code using Pydantic v2 syntax from RAG memory]Physical Interactions

Me: [holds up 3 fingers]

Me: "How many?"

Reachy: "Three fingers"

Me: [picks up unknown object]

Me: "What's this?"

Reachy: "That appears to be a USB-C to HDMI adapter cable."Lessons Learned: What I’d Do Differently

After months of running this system, here’s what I learned:

1. Privacy vs. Performance Isn’t Always a Trade-off

Initial assumption: Local vision would be slower but more private.

Reality: Local vision (LLaVA 13B) is actually faster than cloud APIs when you factor in:

- Network round-trip time

- API rate limits

- Image upload time

- Cold start latency on cloud functions

Lesson: For always-on personal assistants, local inference can match or beat cloud performance while offering better privacy.

2. The 80/20 Rule for Model Selection

Observation:

- 80% of queries handled perfectly by 8B/13B local models

- 15% benefit from specialized tools (weather, stocks, etc.)

- 5% genuinely need frontier model reasoning (complex code, deep analysis)

Lesson: Don’t over-engineer. Use expensive models only when cheaper options fail. My routing strategy saves ~95% on API costs compared to “cloud-only” approaches.

3. RAG Memory Is Harder Than It Looks

Challenge: Initial RAG implementation had poor retrieval accuracy.

Problems:

- Chunk size mattered: Too small (200 chars) lost context, too large (2000 chars) reduced precision

- Metadata is crucial: Can’t rely on embeddings alone—need structured filtering

- Similarity thresholds are dataset-specific: 0.7 worked for preferences, 0.5 needed for documents

Solution:

# Optimal chunking strategy

def chunk_document(text: str, chunk_size: int = 1000, overlap: int = 100):

chunks = []

start = 0

while start < len(text):

end = start + chunk_size

chunk = text[start:end]

chunks.append({

"content": chunk,

"metadata": {

"start_pos": start,

"length": len(chunk),

"chunk_id": len(chunks)

}

})

start += chunk_size - overlap # Overlap prevents boundary issues

return chunksLesson: RAG isn’t plug-and-play. You need domain-specific tuning for chunk size, overlap, and similarity thresholds.

4. Vision Models Are Literal (Sometimes Too Literal)

Example:

Me: "How many people are in this room?"

LLaVA: "I can see one person directly visible in the frame."

[Camera only shows me, but my wife is standing behind camera]

Me: "Are there any people you CAN'T see?"

LLaVA: "I can only analyze what's visible in the image.

I cannot make assumptions about people outside the frame."Lesson: Vision models don’t infer beyond what’s visible. They’re factual to a fault. This is good for accuracy but requires prompt engineering for nuanced queries.

5. Temperature Tuning Is Critical

Discovery: Different tasks need wildly different temperature settings.

| Task | Model | Optimal Temp | Why |

|---|---|---|---|

| Vision | LLaVA 13B | 0.3-0.5 | Factual accuracy matters |

| Chat | Llama 8B | 0.7-0.8 | Conversational warmth |

| RAG | Llama 8B | 0.5 | Balance facts with fluency |

| Reasoning | Claude Sonnet | 0.3 | Deterministic code generation |

| Tools | Llama 8B | 0.2 | Structured output parsing |

Lesson: One temperature doesn’t fit all. Tune per route, not per model.

6. Observability Is Non-Negotiable

Problem: Early on, I had no logging. When routes failed, I had no idea why.

Solution: Comprehensive logging at every layer:

# router_agent.py - Structured logging

import logging

import json

logger = logging.getLogger("reachy.router")

def route_query(self, query: str, **kwargs) -> dict:

start_time = time.time()

# Log incoming query

logger.info(json.dumps({

"event": "query_received",

"query": query[:100], # Truncate for privacy

"has_image": "image" in kwargs,

"timestamp": start_time

}))

# Classify route

route = self.classify(query, **kwargs)

logger.info(json.dumps({

"event": "route_classified",

"route": route,

"classification_time": time.time() - start_time

}))

# Execute handler

try:

result = self.handlers[route](query, **kwargs)

logger.info(json.dumps({

"event": "route_completed",

"route": route,

"total_time": time.time() - start_time,

"status": "success"

}))

return result

except Exception as e:

logger.error(json.dumps({

"event": "route_failed",

"route": route,

"error": str(e),

"total_time": time.time() - start_time

}))

raiseLesson: Instrument everything. Structured JSON logs make debugging 10x easier.

What’s Next: The Enhancement Roadmap

I’m not done. Here’s what I’m planning:

Phase 1: Model Upgrades

-

Upgrade to Nemotron 3 Nano 30B for reasoning (from Llama 8B)

- Better code generation

- Improved multi-step reasoning

- Still fits on GB10 GPU with quantization

-

Upgrade to Nemotron Nano 12B VL for vision (from LLaVA 13B)

- Stronger spatial understanding

- Better OCR capabilities

- Improved gesture recognition

Phase 2: Physical Integration

-

Reachy Robot Arm Control

- Vision-guided object manipulation

- “Pick up the mug” → vision + kinematics + grasping

- Real-time visual servoing

-

Gesture-Based Commands

- Hand signals for common actions

- Sign language interpretation

- Augmented control beyond voice

Phase 3: Advanced Capabilities

-

Long-Form Memory with Summarization

- Compress old conversations with abstractive summaries

- Hierarchical memory (episodic → semantic)

- “Remember” vs “Recall” vs “Forget” commands

-

Multi-Camera Fusion

- 360° environment understanding

- Depth perception from stereo vision

- Object tracking across viewpoints

-

Local Speech-to-Text

- Replace NVIDIA Riva cloud STT with Whisper.cpp

- Fully offline operation

- Sub-second latency on DGX Spark

The Bigger Picture: Embodied AI Is Here

This project taught me that AI isn’t just about language anymore. The next frontier is embodied intelligence—systems that:

- Perceive the physical world through cameras, sensors, depth maps

- Reason about spatial relationships, object properties, temporal sequences

- Act through robotic manipulators, voice, screen interfaces

- Remember personal context, environmental state, interaction history

We’re moving from disembodied chatbots to physical AI agents that exist in the real world alongside us.

The infrastructure is here. The models work. The hardware is affordable (DGX Spark is <$5K). The software is open source.

What’s missing is imagination.

What will you build with AI that has eyes?

Technical Resources

If you want to build something similar:

Code Repository

GitHub: github.com/vitalemazo/nvidia-dgx-spark

Contains:

- Complete router implementation (5-route system)

- RAG system with ChromaDB integration

- Vision handler with LLaVA

- Real-time tools (weather, stocks, time, news)

- Configuration files for DGX Spark deployment

- Test scripts and troubleshooting guides

Key Dependencies

- Pipecat AI: Audio/video pipeline framework

- NVIDIA NAT: Agent orchestration toolkit

- Ollama: Local model hosting (LLaVA, Llama, embeddings)

- ChromaDB: Vector database for RAG

- NVIDIA Riva: Cloud STT (or Whisper.cpp for local)

- ElevenLabs: Natural TTS (Rachel voice)

Hardware Requirements

- GPU: NVIDIA GB10 or better (16GB+ VRAM)

- RAM: 32GB+ for multi-model hosting

- Storage: 100GB+ for models and vector database

- Network: Stable connection for cloud APIs (optional if fully local)

Model Downloads

# LLaVA vision model

ollama pull llava:13b

# Llama chat model

ollama pull llama3.1:8b

# Embeddings model

ollama pull nomic-embed-text

# NVIDIA NIM setup (requires NGC account)

# Follow: https://docs.nvidia.com/nim/Deployment

# Start Ollama

ollama serve

# Start NVIDIA NIM (Llama inference)

docker run --gpus all -p 8003:8000 \

nvcr.io/nvidia/nim/llama-3.1-8b-instruct:latest

# Start NAT Router

cd nat/

source .venv/bin/activate

nat serve --config_file config_spark.yml --port 8001

# Start Pipecat Bot

cd bot/

source .venv/bin/activate

python main.py --host 0.0.0.0 --port 7860Conclusion: The Future Sees Us

We’ve spent decades building AI that can think. Now we’re building AI that can see, understand, and interact with the physical world.

This isn’t just about object detection or image classification. It’s about embodied understanding—AI that knows the difference between a coffee mug and a water bottle not because it memorized ImageNet labels, but because it’s seen them on desks, watched people use them, and understands their purpose in human contexts.

When I ask my system “How many fingers am I holding up?” and it responds instantly with the correct count, it’s not magic. It’s the convergence of:

- Vision models trained on billions of images

- Local inference hardware powerful enough to run 13B parameter models

- Smart orchestration that routes queries to the right subsystems

- Privacy-preserving architecture that keeps data on-device

The future of AI isn’t in the cloud. It’s on your desk, in your home, running locally, understanding your world.

And it starts with giving AI eyes.

Want to discuss embodied AI, vision models, or DGX deployments? Find me on GitHub or LinkedIn. I’m always interested in what people are building with local AI systems.

Related Posts

AI Orchestration for Network Operations: Autonomous Infrastructure at Scale

How a single AI agent orchestrates AWS Global WAN infrastructure with autonomous decision-making, separation-of-powers governance, and 10-100x operational acceleration.

The Audit Agent: Building Trust in Autonomous AI Infrastructure

How an independent audit agent creates separation of powers for AI-driven infrastructure—preventing runaway automation while enabling autonomous operations at scale.

Comments & Discussion

Discussions are powered by GitHub. Sign in with your GitHub account to leave a comment.